Introduction:

Ethernet VPN (EVPN) and Virtual Extensible LAN (VXLAN) are two technologies that have gained significant popularity in recent years due to their ability to provide scalable, efficient, and secure connectivity in modern data center networks. EVPN is a control plane that uses BGP to exchange MAC address reachability between routers, while VXLAN is a network virtualization technology that encapsulates Layer 2 Ethernet frames in UDP datagrams so that Layer 2 segments can be extended over a Layer 3 network.
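To make the encapsulation a little more concrete, here is a minimal Python sketch of the 8-byte VXLAN header defined in RFC 7348. It is only an illustration of the wire format, not something the FortiGate configuration requires; the function names `vxlan_header` and `encapsulate` are made up for this example.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned destination port for VXLAN (RFC 7348)


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # "I" flag set: the VNI field is valid
    # First word: flags (8 bits) + reserved (24 bits).
    # Second word: VNI (24 bits) + reserved (8 bits).
    return struct.pack("!II", flags << 24, vni << 8)


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Return the UDP payload: VXLAN header followed by the original Ethernet frame."""
    return vxlan_header(vni) + inner_frame


print(vxlan_header(1000).hex())  # 080000000003e800
```

The outer IP and UDP headers are left to the operating system in this sketch; the point is simply that the original Layer 2 frame travels unchanged behind a small header carrying the VNI.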

Together, EVPN and VXLAN can be used to create a highly scalable and flexible network that can support a variety of workloads and applications. However, deploying EVPN and VXLAN can be challenging, especially when it comes to ensuring security and reliability.

This article describes a secure EVPN VXLAN solution that addresses these challenges and provides step-by-step instructions on how to configure it. The solution leverages Fortinet's FortiGate Next Generation Firewalls and provides security and control over network traffic and data content. The solution supports high availability and interoperability with existing network infrastructure.

Until now, securing an EVPN VXLAN fabric at scale has rarely been practical, both because of the performance burden placed on network security devices and because existing firewall vendors lacked RFC 8365 support. With the release of FortiOS 7.4 by Fortinet, you can now take advantage of hardware-accelerated VXLAN and EVPN control plane integration.

The big advantage of EVPN integration rather than basic VXLAN inspection is the ability to steer traffic through the firewall as needed rather than having to pass all traffic through the firewall. EVPN integration means that with a simple configuration change in the network, you can attract traffic through the firewall for inspection.

This article is divided into 3 sections:

Solution Overview

Implementation

Test and verification

Let's dive in.

Solution Overview:

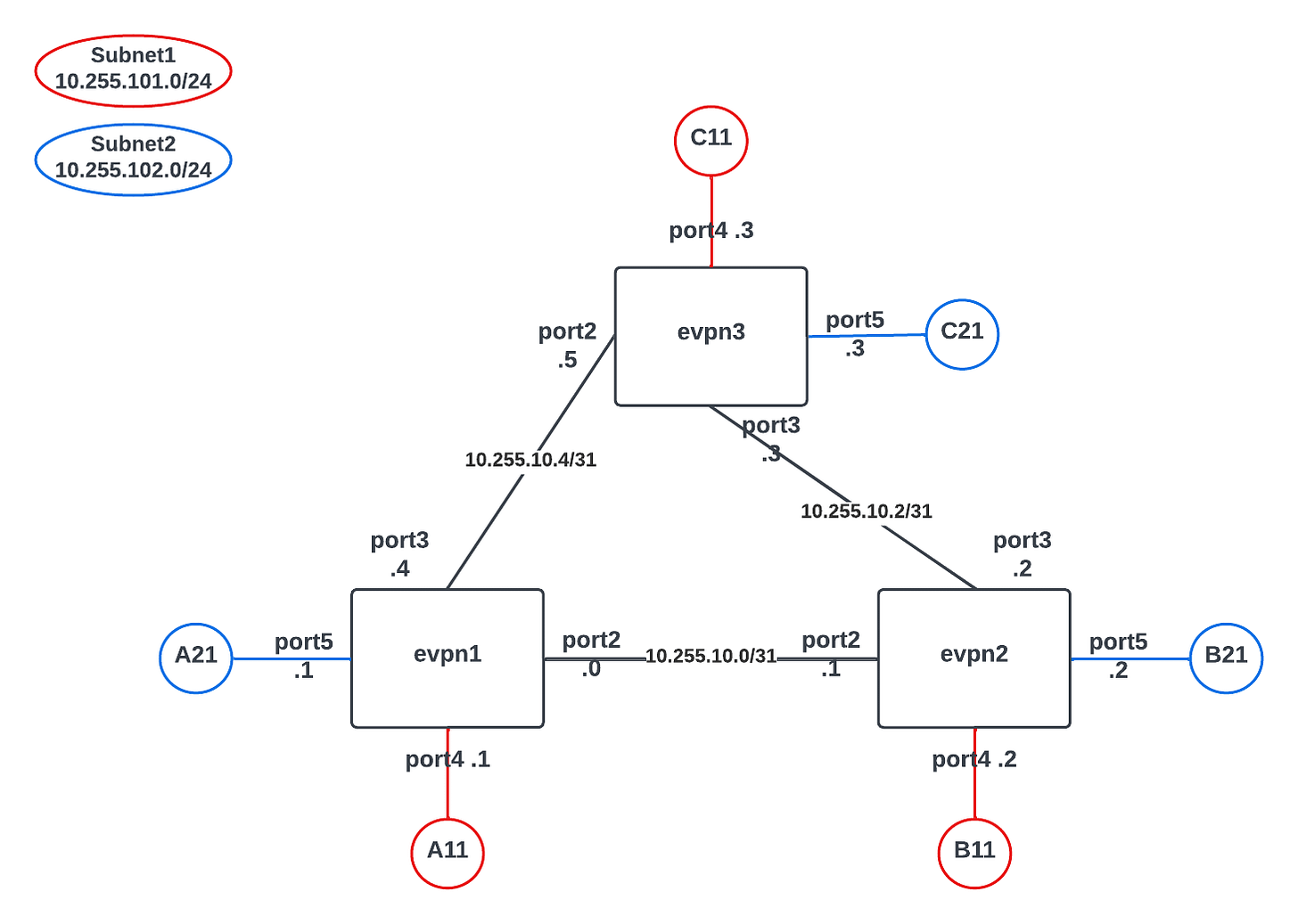

The diagram shows the environment we'll be using in this example. We will leverage 3x FortiGate VMs running FortiOS version 7.4.0. Each FortiGate will have 5 interfaces: port1 for management, port2 and port3 for VXLAN transport, and port4 and port5 for LAN segments.

Each circle in the diagram indicates a Linux container host (LXC) and each FortiGate (FGT) protects 1 LXC for each of the 2 LAN ports. These LXC systems will be used to verify communication across the fabric.

The FGTs communicate over Layer 3 using /31 subnets, over which they establish iBGP sessions to exchange loopback and MAC address information.
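If /31 point-to-point addressing is new to you, the following Python sketch shows how the far-end address of each link can be derived. The helper name `p2p_peer` is hypothetical; the local addresses are the ones used on evpn1 in this article.

```python
import ipaddress


def p2p_peer(local: str) -> ipaddress.IPv4Address:
    """Return the other address on a /31 point-to-point link (RFC 3021)."""
    addr = ipaddress.IPv4Address(local)
    # A /31 holds exactly two addresses that differ only in the last bit.
    return ipaddress.IPv4Address(int(addr) ^ 1)


# Transport addresses of evpn1 as configured in this article.
for port, local in {"port2": "10.255.10.0", "port3": "10.255.10.4"}.items():
    print(f"{port}: local {local}/31, peer {p2p_peer(local)}")
```

The two peers this prints, 10.255.10.1 and 10.255.10.5, are the addresses used as BGP neighbors in the implementation section.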

Implementation:

We will not be covering the setup of the FGT VMs or the LXCs in this article. If that is something you need help with, then leave a comment and I'll see if there is enough demand for a separate article for this. We will show the configuration for just one of the FGTs but it will be identical for all 3 other than setting the IP addresses and hostnames correctly.

The first thing I like to do is set the hostname and timezone and, in the lab, raise the admin timeout to 480 minutes (8 hours).

config system global

set admintimeout 480

set hostname "evpn1"

set timezone 12

end

Next, let's set up our interfaces. I am using port1 for my management and like to put it into a separate VRF. This isn't required but can help to avoid routing issues. The physical ports were described above, and we add a loopback interface for the VXLAN tunnels. Note that the below configuration is additive to the defaults, so some things, like "allowaccess" on port1, are already in the default config and are not explicitly called out here. This helps to reduce the clutter.

config system interface

edit "port1"

set mode static

set vrf 31

set description "Out of Band Management"

set ip 172.16.100.221 255.255.255.0

next

edit "port2"

set ip 10.255.10.0 255.255.255.254

set description "FG2 Connection"

set allowaccess ping

next

edit "port3"

set ip 10.255.10.4 255.255.255.254

set description "FG3 Connection"

set allowaccess ping

next

edit "loopback1"

set vdom "root"

set ip 10.255.20.1 255.255.255.255

set allowaccess ping https ssh http

set type loopback

next

end

Define your static route for management purposes if needed. BGP will take care of the other routes needed in this design.

config router static

edit 100

set gateway 172.16.100.1

set device "port1"

next

end

Let's define the EVPN instances, with each instance ID given in the "edit" statement. The configuration includes the route distinguisher and route targets for each instance. We enable local learning and ARP suppression to minimize the traffic across this EVPN fabric.

config system evpn

edit 1000

set rd "1000:1000"

set import-rt "1:1"

set export-rt "1:1"

set ip-local-learning enable

set arp-suppression enable

next

edit 2000

set rd "2000:2000"

set import-rt "2:2"

set export-rt "2:2"

set ip-local-learning enable

set arp-suppression enable

next

end

Now we want to define our VXLAN instances. In this scenario we'll have 2, and we will tie them both to the loopback1 interface that was previously created. Each VXLAN instance needs a different VNI. If you don't configure the EVPN piece first, you will have to set a remote-ip even though it isn't used, because VXLAN can also be configured statically rather than dynamically as in this scenario. Since we have defined EVPN already, we can just reference it here with "evpn-id" instead of using the remote-ip.

config system vxlan

edit "vxlan1"

set interface "loopback1"

set vni 1000

set evpn-id 1000

set learn-from-traffic enable

next

edit "vxlan2"

set interface "loopback1"

set vni 2000

set evpn-id 2000

set learn-from-traffic enable

next

end

After you define the VXLAN instances, FortiOS dynamically creates an entry of the same name in the interfaces hierarchy. Now we can use that interface and combine it with our physical port into a switch-interface with the below configuration. We do this twice, once for each VXLAN instance. When deploying on an NP7-based FGT, it is important that you set the intra-switch-policy to explicit so the NP7 can hardware-accelerate the traffic. Normally a "switch-interface" setup is CPU bound and not hardware accelerated, but on the NP7 platform with this specific configuration, it is. The VM setup we have here has no hardware acceleration, but the configuration works the same way.

The purpose of the "intra-switch-policy" is to let the FGT know whether traffic is allowed by default between interfaces within the switch (implicit) or whether it is subject to firewall policy (explicit).

config system switch-interface

edit "sw1"

set vdom "root"

set member "port4" "vxlan1"

set intra-switch-policy explicit

next

edit "sw2"

set vdom "root"

set member "port5" "vxlan2"

set intra-switch-policy explicit

next

end

Similar to the VXLAN interface creation, when we create the "switch-interface" FortiOS dynamically creates an entry of the same name in the interfaces hierarchy. These interfaces will be Layer 3 so we need to add some IP addressing to them.

config system interface

edit "sw1"

set vdom "root"

set ip 10.255.101.1 255.255.255.0

set allowaccess ping

set type switch

next

edit "sw2"

set vdom "root"

set ip 10.255.102.1 255.255.255.0

set allowaccess ping

set type switch

next

end

A DHCP server is not required for this setup but may be desired. We will create a service object for DHCP traffic and apply it in a firewall rule to prevent DHCP traffic from traversing the fabric (policy 1 below has no "set action" line, so it falls back to the default action of deny). This way, each FGT provides DHCP to the local devices and sets its own interface as the gateway. Everything would work with just one interface and gateway for all devices in the fabric, but doing this optimizes things a bit. If you decide not to run a DHCP server, it is still worth including these firewall configurations in case a server is introduced later. In any event, you will need a firewall rule to allow traffic within and across the fabric, which is our second rule below.

config firewall service custom

edit "DHCP-Discover"

set udp-portrange 67:68

next

end

config firewall policy

edit 1

set srcintf "any"

set dstintf "any"

set srcaddr "all"

set dstaddr "all"

set schedule "always"

set service "DHCP-Discover"

set logtraffic all

next

edit 2

set srcintf "any"

set dstintf "any"

set action accept

set srcaddr "all"

set dstaddr "all"

set schedule "always"

set service "ALL"

next

end

So far, we've defined the VXLAN instances, tied each one to a "physical" port via a switch-interface, and set an IP on it. We also set up the EVPN instances and referenced them in the VXLAN configuration.
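Because the EVPN, VXLAN and switch-interface blocks differ only in a handful of values per segment, they lend themselves to templating when you repeat them across FGTs. The following is a minimal Python sketch of that idea. The `Segment` class and `render` function are illustrative names of my own, not part of any Fortinet tooling, and the sketch assumes the conventions used in this article (the VNI doubles as the EVPN instance ID and as both halves of the route distinguisher).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    vni: int        # doubles as the EVPN instance ID, as in this article
    rt: str         # used for both import-rt and export-rt
    lan_port: str   # physical port bridged with the VXLAN interface


# Values taken from the configuration shown in this article.
SEGMENTS = [Segment(1000, "1:1", "port4"), Segment(2000, "2:2", "port5")]


def render(segments, vtep_interface="loopback1"):
    """Render the EVPN, VXLAN and switch-interface blocks as FortiOS CLI text."""
    evpn, vxlan, switch = [], [], []
    for index, seg in enumerate(segments, start=1):
        evpn += [
            f"edit {seg.vni}",
            f'set rd "{seg.vni}:{seg.vni}"',
            f'set import-rt "{seg.rt}"',
            f'set export-rt "{seg.rt}"',
            "set ip-local-learning enable",
            "set arp-suppression enable",
            "next",
        ]
        vxlan += [
            f'edit "vxlan{index}"',
            f'set interface "{vtep_interface}"',
            f"set vni {seg.vni}",
            f"set evpn-id {seg.vni}",
            "set learn-from-traffic enable",
            "next",
        ]
        switch += [
            f'edit "sw{index}"',
            'set vdom "root"',
            f'set member "{seg.lan_port}" "vxlan{index}"',
            "set intra-switch-policy explicit",
            "next",
        ]
    blocks = [
        ("config system evpn", evpn),
        ("config system vxlan", vxlan),
        ("config system switch-interface", switch),
    ]
    lines = []
    for header, body in blocks:
        lines += [header, *body, "end"]
    return "\n".join(lines)


config_text = render(SEGMENTS)
print(config_text)
```

The output reproduces the three blocks shown earlier, in the order they need to be applied: EVPN first, then VXLAN, then the switch-interface that references the VXLAN interface.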

The final piece is to configure BGP to share all this goodness with the rest of the fabric. There is nothing that special here in the BGP configuration. In fact, this may be too much configuration and you should experiment to see which pieces you can remove. I was a little lazy here and just copied the configuration over from the docs in its entirety. One thing to note is that I did try using neighbor-groups with this solution and it did NOT work for me. I tested on beta code so take some time and experiment yourself to see if you can get it working.

config router bgp

set as 65001

set router-id 10.255.20.1

set ibgp-multipath enable

set recursive-next-hop enable

set graceful-restart enable

config neighbor

edit 10.255.10.1

set ebgp-enforce-multihop enable

set next-hop-self enable

set next-hop-self-vpnv4 enable

set soft-reconfiguration enable

set remote-as 65001

next

edit 10.255.10.5

set ebgp-enforce-multihop enable

set next-hop-self enable

set next-hop-self-vpnv4 enable

set soft-reconfiguration enable

set remote-as 65001

next

end

config network

edit 1

set prefix 10.255.20.1 255.255.255.255

next

end

end

Test and Verification:

Test and verification is pretty simple here. Fire up your LXC or another host connected to port4 or port5 of one of the FGTs and ping across to another host behind one of the other FGTs. Just be sure that your VM or physical network does not allow communication of these hosts without going through the FGT.

A-11:~# ping 10.255.101.21

PING 10.255.101.21 (10.255.101.21): 56 data bytes

64 bytes from 10.255.101.21: seq=2 ttl=64 time=0.601 ms

64 bytes from 10.255.101.21: seq=3 ttl=64 time=0.467 ms

64 bytes from 10.255.101.21: seq=4 ttl=64 time=0.499 ms

^C

--- 10.255.101.21 ping statistics ---

5 packets transmitted, 3 packets received, 40% packet loss

round-trip min/avg/max = 0.467/0.522/0.601 ms

Notice that we lost the first two packets while the EVPN fabric learned the MAC addresses of the two hosts and where they live. If we do that again, we'll see that we don't lose any traffic because the MACs are already cached by the FGTs.

A-11:~# ping 10.255.101.21

PING 10.255.101.21 (10.255.101.21): 56 data bytes

64 bytes from 10.255.101.21: seq=0 ttl=64 time=0.625 ms

64 bytes from 10.255.101.21: seq=1 ttl=64 time=0.507 ms

64 bytes from 10.255.101.21: seq=2 ttl=64 time=0.733 ms

^C

--- 10.255.101.21 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.507/0.621/0.733 ms
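If you want to script this check rather than eyeball it, a small Python sketch like the one below can pull the loss figure out of the ping summary. It assumes the BusyBox-style summary line seen in the two runs above; other ping implementations word the summary differently, and the function name `packet_loss` is just for illustration.

```python
import re

SUMMARY = re.compile(r"(\d+) packets transmitted, (\d+) packets received")

# Summary lines copied from the two ping runs in this article.
FIRST_RUN = "5 packets transmitted, 3 packets received, 40% packet loss"
SECOND_RUN = "3 packets transmitted, 3 packets received, 0% packet loss"


def packet_loss(ping_output: str) -> float:
    """Return the packet loss percentage computed from a ping summary."""
    match = SUMMARY.search(ping_output)
    if match is None:
        raise ValueError("no ping summary line found")
    sent, received = map(int, match.groups())
    return 100.0 * (sent - received) / sent


print(packet_loss(FIRST_RUN))   # loss while the fabric learns the MACs
print(packet_loss(SECOND_RUN))  # loss once the MACs are cached
```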

A-11:~# ip addr

………………….

2: eth0@if157: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP qlen 1000

link/ether 72:a1:63:cb:52:5e brd ff:ff:ff:ff:ff:ff

inet 10.255.101.11/24 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::70a1:63ff:fecb:525e/64 scope link

valid_lft forever preferred_lft forever

B-11:~# ip addr

………………….

2: eth0@if158: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP qlen 1000

link/ether 4e:9e:9e:84:e0:43 brd ff:ff:ff:ff:ff:ff

inet 10.255.101.21/24 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::4c9e:9eff:fe84:e043/64 scope link

valid_lft forever preferred_lft forever

Let's take a look at the FGT to see how it views things.

evpn1 # diagnose sys vxlan fdb list vxlan1

mac=00:00:00:00:00:00 state=0x0082 remote_ip=10.255.20.2 port=4789 vni=1000 ifindex=0

mac=00:00:00:00:00:00 state=0x0082 remote_ip=10.255.20.3 port=4789 vni=1000 ifindex=0

mac=4e:9e:9e:84:e0:43 state=0x0082 remote_ip=10.255.20.2 port=4789 vni=1000 ifindex=0

evpn1 # diagnose netlink brctl name host sw1

show bridge control interface sw1 host.

fdb: hash size=32768, used=5, num=5, depth=1, gc_time=4, ageing_time=3, arp-suppress

Bridge sw1 host table

port no device devname mac addr ttl attributes

2 17 vxlan1 00:00:00:00:00:00 28 Hit(28)

2 17 vxlan1 4e:9e:9e:84:e0:43 134 Hit(134)

1 6 port4 72:a1:63:cb:52:5e 134 Hit(134)

2 17 vxlan1 66:b6:53:c7:02:2b 0 Local Static

1 6 port4 da:30:47:3f:bd:3d 0 Local Static

evpn1 # get l2vpn evpn table

EVPN instance 2000

Broadcast domain VNI 2000 TAGID 0

EVPN PEER table:

VNI Remote Addr Binded Address

2000 10.255.20.3 10.255.20.3

2000 10.255.20.2 10.255.20.2

EVPN instance 1000

Broadcast domain VNI 1000 TAGID 0

EVPN MAC table:

MAC VNI Remote Addr Binded Address

4e:9e:9e:84:e0:43 1000 10.255.20.2 -

EVPN PEER table:

VNI Remote Addr Binded Address

1000 10.255.20.3 10.255.20.3

1000 10.255.20.2 10.255.20.2

There are many more "show" commands to do on the FGT depending on what you want to see but this should give you a good start to verify things are working properly.
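When the fabric grows, reading the forwarding database by eye gets tedious. Here is a minimal Python sketch that parses the "diagnose sys vxlan fdb list" output shown above and groups learned host MACs by remote VTEP. The regular expression is based only on the output format in this article, so treat it as an assumption that may need adjusting on other builds. I am also assuming that the all-zero MAC entries are the flood entries for each remote peer rather than real hosts, so the sketch skips them.

```python
import re
from collections import defaultdict

FDB_LINE = re.compile(
    r"mac=(?P<mac>[0-9a-f:]{17})\s+state=\S+\s+"
    r"remote_ip=(?P<vtep>\S+)\s+port=(?P<port>\d+)\s+vni=(?P<vni>\d+)"
)

# Output copied from evpn1 in this article.
SAMPLE = """\
mac=00:00:00:00:00:00 state=0x0082 remote_ip=10.255.20.2 port=4789 vni=1000 ifindex=0
mac=00:00:00:00:00:00 state=0x0082 remote_ip=10.255.20.3 port=4789 vni=1000 ifindex=0
mac=4e:9e:9e:84:e0:43 state=0x0082 remote_ip=10.255.20.2 port=4789 vni=1000 ifindex=0
"""


def remote_macs(fdb_output: str) -> dict:
    """Map each remote VTEP to the host MACs learned behind it."""
    table = defaultdict(list)
    for line in fdb_output.splitlines():
        match = FDB_LINE.search(line)
        # Skip lines that do not match and the all-zero (assumed flood) entries.
        if match and match["mac"] != "00:00:00:00:00:00":
            table[match["vtep"]].append(match["mac"])
    return dict(table)


print(remote_macs(SAMPLE))
```

For the sample above, the only host entry is 4e:9e:9e:84:e0:43 behind 10.255.20.2, which is the MAC of B-11 seen in the "ip addr" output.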

If you want to add the DHCP server, be sure to change the default gateway at each FGT to point to the local interface instead of having the same gateway across the fabric.

One thing to know about building firewall policy with this setup is that if you have traffic going from Subnet1/VXLAN1 to Subnet2/VXLAN2, then you need to build the policy on the originating FGT with a source interface of "sw1" and a destination interface of "sw2". On the destination FGT you would use a source interface of "vxlan2" and a destination interface of "port5". Policies for traffic that stays within the subnet stick to the "VXLAN" and "port/VLAN" type interfaces.
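The interface selection rule above can be written down as a small Python sketch. The function name `policy_interfaces` and the numbering scheme (segment n maps to "sw<n>", "vxlan<n>" and "port<n+3>") are assumptions that happen to match the names in this article. The inter-subnet case follows the description above directly; the direction of the intra-subnet pairs is my own reading of it, so verify it in your lab.

```python
def policy_interfaces(src_segment: int, dst_segment: int) -> dict:
    """Return (srcintf, dstintf) pairs for the originating and destination FGT.

    Segment n is assumed to map to "sw<n>", "vxlan<n>" and LAN port "port<n+3>",
    which matches sw1/vxlan1/port4 and sw2/vxlan2/port5 in this article.
    """
    def lan_port(segment: int) -> str:
        return f"port{segment + 3}"

    if src_segment != dst_segment:
        # Routed between subnets on the originating FGT, then bridged on the far side.
        return {
            "originating": (f"sw{src_segment}", f"sw{dst_segment}"),
            "destination": (f"vxlan{dst_segment}", lan_port(dst_segment)),
        }
    # Traffic staying within one subnet is bridged on both FGTs (assumed direction).
    return {
        "originating": (lan_port(src_segment), f"vxlan{src_segment}"),
        "destination": (f"vxlan{src_segment}", lan_port(src_segment)),
    }


print(policy_interfaces(1, 2))
print(policy_interfaces(1, 1))
```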

Conclusion:

I hope this was helpful to demonstrate this new EVPN feature set and how it could be used to span a subnet/VLAN across a layer 3 network. Thank you for reading this far and let me know if you have any questions or feedback to provide.

Hi,

Thank you for the article. Would it be possible to run the gateway of a VLAN on the FortiGate and use VXLAN to populate it to a switch?

Scenario:

Fortigate ->switch (running vxlan) -> client

Thank you in advance!